64 Cores of Rendering Madness: The AMD Threadripper Pro 3995WX Review

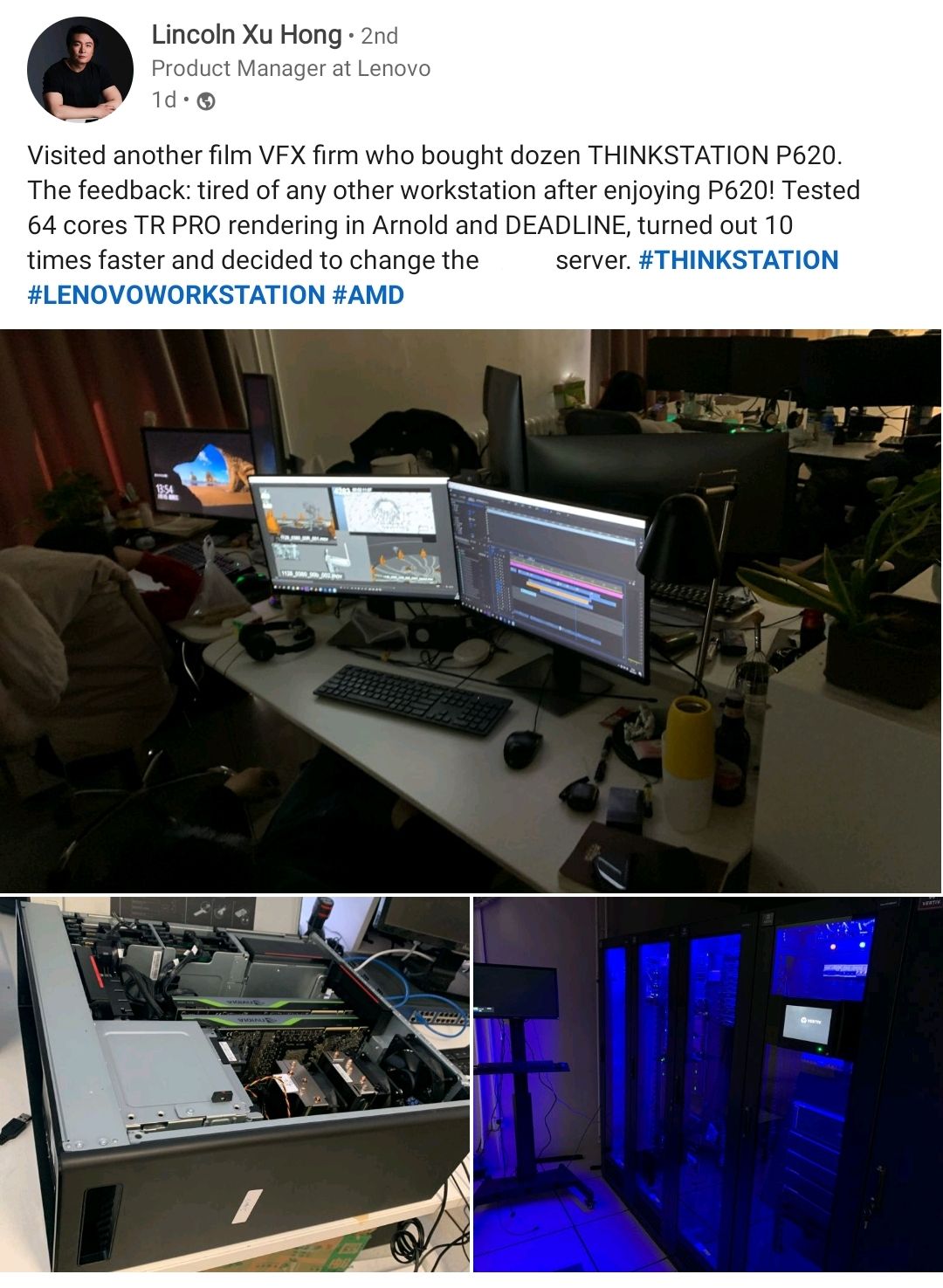

Knowing your market is fundamental to product planning, marketing, and distribution. There’s no point creating a product with no market, or having something amazing and offering it to the wrong sort of customers. When AMD started offering high-core count Threadripper processors, the one market that took as many as they could get was the graphics design business: visual effects companies and those focused on rendering loved the core count, the memory support, all the PCIe lanes, and the price. But if there’s one thing more performance brings, it’s the desire for even more performance. Enter Threadripper Pro.

computational graphics goes brrrrrrr

There are a number of industries where, looking from the outside, an enthusiast might assume that using a CPU is old fashioned, and ask why that industry hasn’t moved fully to GPU accelerators. One of the big ones is machine learning: despite the push to dedicated machine learning hardware and lots of big businesses doing ML on GPUs, most machine learning today is still done on CPUs. The same is still true with graphics and visual effects.

The reason behind this typically comes down to the software packages in use, and the programmers in charge.

Developing software for CPUs is easy, because that is what most people are trained on. Optimization packages for CPUs are well established, and even for upcoming specialist instructions, these can be developed in simulated environments. A CPU is designed to handle almost anything thrown at it, even super bad code.

By contrast, GPU compute is harder. It isn’t as difficult as it used to be, as there are wide arrays of libraries that enable GPU compilation without having to know too much about how to program for a GPU. The difficulty lies in architecting the workload to take advantage of what a GPU has to offer. A GPU is a massive engine that performs the same operation on hundreds of parallel threads at the same time. It also has a very small cache, and accesses to GPU memory take a long time, so that latency is hidden by having even more threads in flight at once. If the compute part of the software isn’t amenable to that sort of workload, such as being structurally more linear, then spending 6 months redeveloping for a GPU is a wasted effort. And even if the math works out better on a GPU, rebuilding a 20-year-old codebase (or older) for GPUs is still a substantial undertaking that needs a group of experts.
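As a rough illustration of the difference between a workload that maps well to many parallel threads and one that is "structurally more linear", here is a toy sketch in plain Python. The function names `brighten` and `running_state` are hypothetical and chosen only for this example; nothing here comes from a real rendering package, and nothing runs on a GPU.

```python
from typing import List


def brighten(pixels: List[float], gain: float) -> List[float]:
    """Independent work: each output depends only on its own input.

    Every element could be handed to a separate thread and computed
    in any order, which is the shape of work a GPU is built for.
    """
    return [min(1.0, p * gain) for p in pixels]


def running_state(samples: List[float], decay: float) -> List[float]:
    """Dependent work: each output needs the previous output.

    As written, step i cannot start until step i-1 has finished,
    so throwing more threads at this loop does not help.
    """
    out: List[float] = []
    state = 0.0
    for s in samples:
        state = decay * state + s
        out.append(state)
    return out


if __name__ == "__main__":
    print(brighten([0.2, 0.5, 0.9], 2.0))
    print(running_state([1.0, 1.0, 1.0], 0.5))
```

This is deliberately simplified. Some dependent loops can be restructured into parallel-friendly forms, but doing so is exactly the kind of re-architecting effort described above.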

GPU compute has come on in leaps and bounds since I did it in the late 2000s. But the fact remains that there are still a number of industries that rely on a mix of CPU and GPU throughput. These include machine learning, oil and gas, financial, medical, and the one we’re focusing on today: visual effects.

A visual effects design and rendering workload is a complex mix of dedicated software platforms and plugins. Software like Cinema4D, Blender, Maya, and others rely on the GPU to showcase a partially rendered scene for artists to work on in real time, and also rely on strong single core performance. The bulk of compute for the final render, however, will depend on what plugins are being used for that particular product. Some plugins are GPU accelerated, such as Blender Cycles, but the move to more GPU-accelerated workloads is taking its time. Ray tracing accelerated design is one area that is getting a lot of GPU attention, for example.

There are always questions as to which method produces the best image: there’s no point using a GPU to reduce rendering time if it adds noise or reduces the quality. A film studio is more likely to prioritize a slow, higher-quality render on CPUs over a fast, noisy one on GPUs, or alternatively to render a lower resolution image and then upscale with trained AI. Based on our conversations with OEMs that supply the industry, we’ve been told that a number of studios will outright say that rendering their workflow on a CPU is the only way they do it. The other angle is memory, as the right CPU can have 256 GB to 4 TB of DRAM available, whereas the best GPUs can only supply 80 GB (and those are the super expensive ones).

The point I’m making here is that VFX studios still prefer CPU compute, and the more the better. When AMD launched its new Zen-based processors, particularly the 32 and 64 core count models, these were immediately earmarked as potential replacements for the Xeons being used in these VFX studios. AMD’s parts prioritized FP compute, a key element in VFX design, and having double the cores per socket was also a winner, combined with the large amount of cache per core. This latter part meant that even though the first high-core count parts had a non-uniform memory architecture, it wasn’t as much of an issue as with some other compute processes.

A number of VFX companies, as far as we understand, focused on AMD’s Threadripper platform over the corresponding EPYC. When both of these parts first arrived on the market, it was very easy for VFX studios to invest in under-the-desk workstations built on Threadripper, while EPYC was more for server rack installations and not so much for workstations. Roll around to Threadripper 3000 and EPYC 7002, and now there are 64 cores, 64 PCIe 4.0 lanes, and lots of choice. VFX studios still went for Threadripper, mostly because it offered a higher 280 W TDP in something that could easily be sourced from system integrators like Armari that specialize in high-compute under-desk systems. They also asked AMD for more.

AMD has now rolled out its Threadripper Pro platform, addressing some of these requirements. While VFX is always focused on core compute, TR Pro now gives double the PCIe lanes, double the memory bandwidth, support for up to 2 TB of memory, and Pro-level admin support. These PCIe lanes could be extended to local storage (always important in VFX), the memory capacity allows for large RAMDisks, and the admin support through DASH helps keep the company systems managed together appropriately. AMD’s Memory Guard is also in its Pro line of parts, which is designed to enable full memory encryption.

Beyond VFX, AMD has cited world leadership compute with TR Pro for product engineering with Creo, 3D visualization with KeyShot, model design in architecture with Autodesk Revit, and data science, such as oil and gas dataset analysis, where the datasets are growing into the hundreds of GB and require substantial compute support.

Threadripper Pro vs Workstation EPYC (WEPYC)

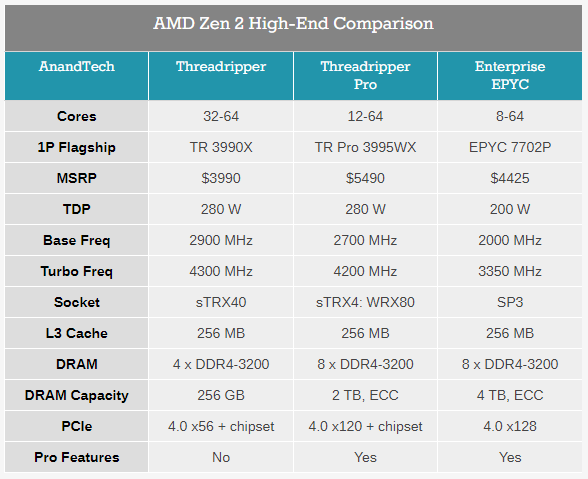

Looking at the benefits that these new processors provide, it’s clear to see that these are more Workstation-style EPYC parts than ‘enhanced’ Threadrippers. Here’s a breakdown:

To get these new parts starting from EPYC, all AMD had to do was raise the TDP to 280 W, and cut the DRAM support. If we start from a Threadripper base, there are 3-4 substantial changes. So why is this called Threadripper Pro, and not Workstation EPYC?

We come back to the VFX studios again. Having already bought in to the Threadripper branding and way of thinking, keeping these parts as Threadripper helps smooth that transition – this vertical had kind of already said they preferred Threadripper over EPYC, from what we are told, and so keeping the naming consistent means that there is no real re-education to do.

The other element is that the EPYC processor line is somewhat fractured: there are standard versions, high performance H models, high frequency F models, and then a series of custom designs under B, V, and others for specific customers. By keeping this new line as Threadripper Pro, it keeps it all under one umbrella.

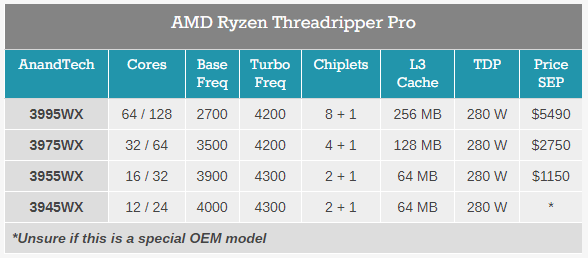

Threadripper Pro Offerings: 12 core to 64 core

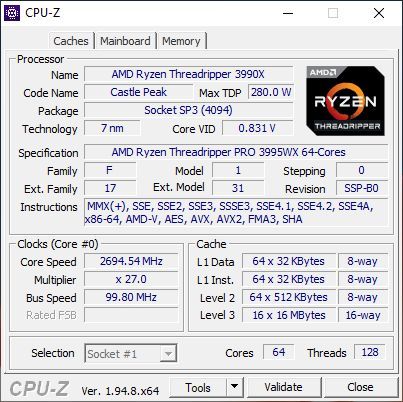

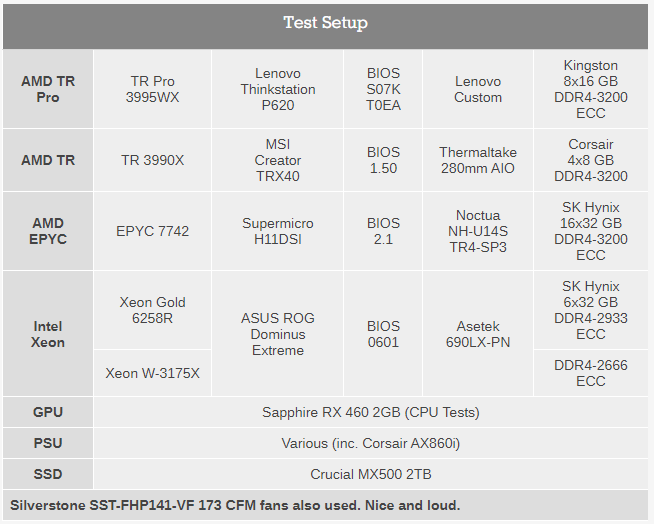

AMD announced these processors in the middle of last year, with the Lenovo ThinkStation P620 as the launch platform. From my experience, the ThinkStation line is very well designed, and we’re testing our 3995WX in a P620 today.

When TR Pro was announced with Lenovo, we weren’t sure if any other OEM would have access to Threadripper Pro. When we asked OEMs about it earlier that year, before we even knew if TR Pro was a real thing, they stated that AMD hadn’t even marked the platform on their roadmap, which we reported at the time. We have since learned that Lenovo had the 6-month exclusive, and information was only supplied to other vendors (ASUS, GIGABYTE, Supermicro) after it had been announced.

To that end, AMD has since announced that Threadripper Pro is coming to retail, both for other OEMs to design systems and for end-users to build their own. Despite using the same LGA4094 socket as the other Threadripper and EPYC processors, TR Pro will be locked down to WRX80 motherboards. We currently know of three: the Supermicro and GIGABYTE models, plus the ASUS Pro WS WRX80E-SAGE SE Wi-Fi, which we have had in house for a short hands-on, although we weren’t able to test it.

Of the four processors listed above, the top three are going on sale. It’s worth noting that only the 64-core comes with 256 MB of L3 cache, while the 32-core comes with 128 MB of L3. AMD has ensured that these chiplet designs use only as many chiplets as are absolutely necessary, keeping L3 cache per core consistent as well as the 8 cores per chiplet (the EPYC product line varies this a bit).

The fourth processor, the 12-core, would appear to be an OEM-only specific processor for prebuilt systems.
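The cache figures quoted above for the 64-core and 32-core parts can be checked with some quick arithmetic. The sketch below uses only the numbers stated in the text (core counts, total L3, and 8 cores per chiplet); the helper names are hypothetical, and the other two models are left out because their L3 sizes are not given here.

```python
CORES_PER_CHIPLET = 8  # as stated in the text for these parts


def chiplets_needed(cores: int) -> int:
    """Minimum number of 8-core chiplets required for a given core count."""
    return -(-cores // CORES_PER_CHIPLET)  # ceiling division


def l3_per_core_mb(cores: int, l3_mb: int) -> float:
    """Total L3 divided evenly across all cores, in MB."""
    return l3_mb / cores


# (cores, total L3 in MB), taken from the paragraph above
parts = {"64-core": (64, 256), "32-core": (32, 128)}

if __name__ == "__main__":
    for name, (cores, l3_mb) in parts.items():
        n = chiplets_needed(cores)
        print(
            f"{name}: {n} chiplets, "
            f"{l3_mb / n:.0f} MB L3 per chiplet, "
            f"{l3_per_core_mb(cores, l3_mb):.0f} MB L3 per core"
        )
```

Both parts work out to the same L3 per core and per chiplet, which is what "keeping L3 cache per core consistent" means in practice.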

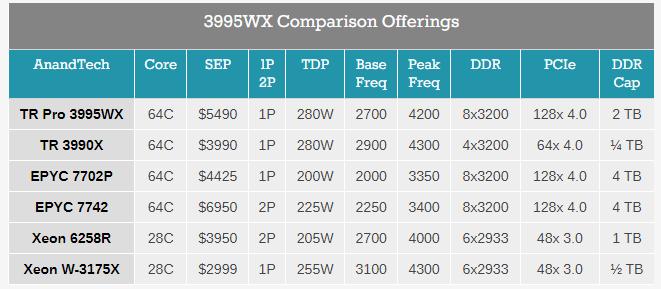

Threadripper Pro versus The World

These Threadripper Pro offerings are designed to compete against two segments. The first is AMD itself, showing anyone who is using a high-end professional system built on first generation Zen hardware that there is a lot of performance to be had. The second is Intel workstation customers, either using single socket Xeon W (which tops out at 28 cores), or a dual socket Xeon system that costs more and uses a lot more power simply because it is dual socket, and that also has a non-uniform memory architecture.

We have almost all these in this test (we don't have the 7702P, but we do have the 7742), and realistically these are the only processors that should be considered if the 3995WX is an option for you:

Intel tops out at 28 cores, and there is no getting around that. Technically Intel has the AP processor line that goes up to 56 cores, however these are for specialist systems and we haven’t had one physically sent to us for testing. Those are also $20k+ per CPU, and are effectively two CPUs bolted together in one package.

The AMD comparison points are the best Threadripper option and the best available EPYC processor, albeit the 2P version. The best comparison here would be the 7702P, the single socket variant, which is much more price competitive. However, we haven’t got this in for testing; instead we have AMD’s EPYC 7742, which is the dual socket version but offers slightly higher performance.
