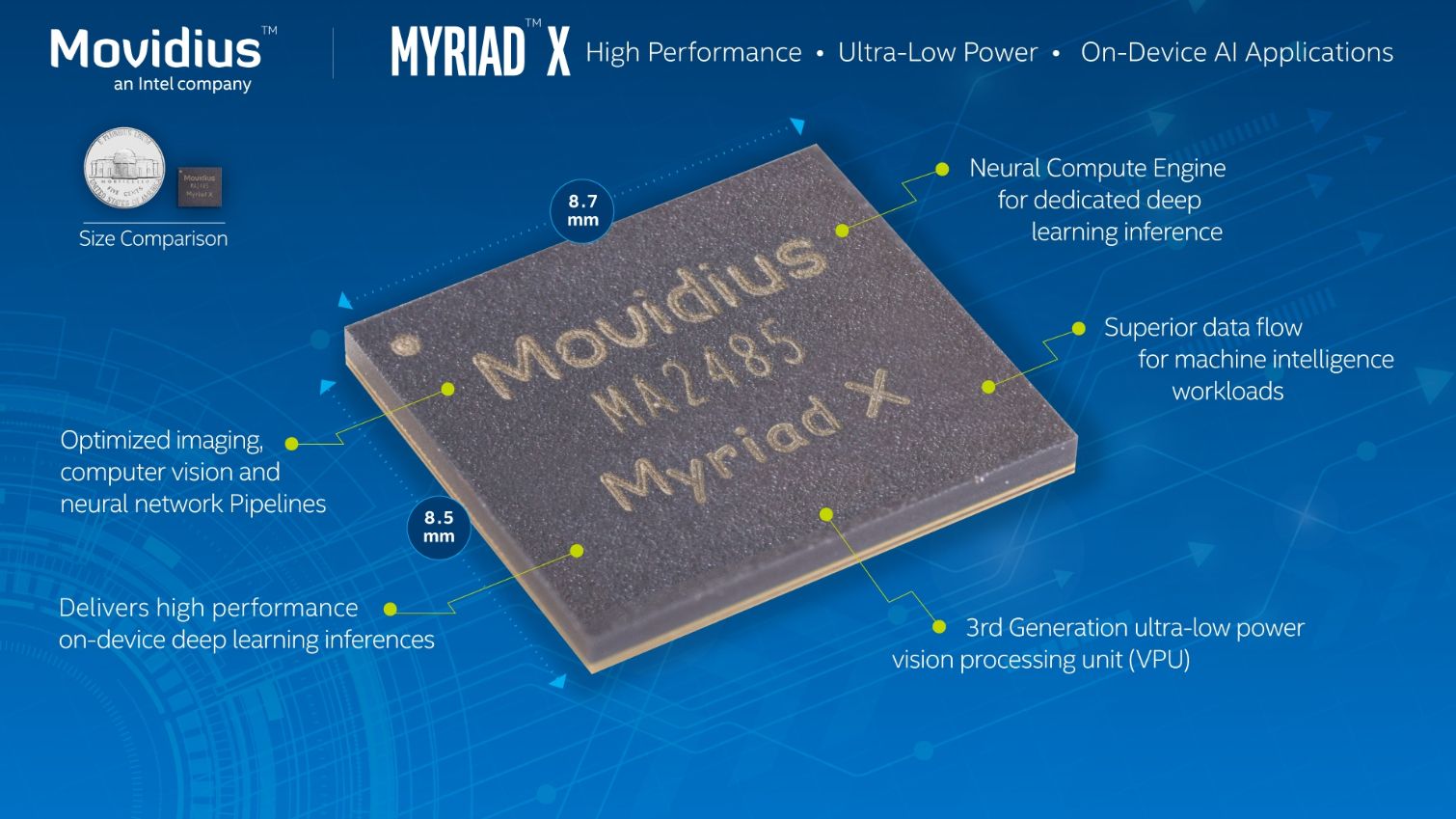

Intel Unveils Movidius Myriad X Vision Processing Unit

Movidius, now an Intel company, announced the Myriad X VPU, which the company claims is the world's first Vision Processing Unit (VPU) with a dedicated Neural Compute Engine.

Movidius aims to infuse AI capabilities into everyday devices through several of its initiatives. AI workloads break down into two steps: training and inference. Training, the deep learning step, consists of feeding data into a machine learning algorithm so it can teach itself to accomplish a task, such as identifying images or words or analyzing video. This intensive process often requires hefty data center resources that can draw on many different forms of compute, such as GPUs, FPGAs, and ASICs. Movidius provides its Fathom Neural Compute Stick to bring limited deep learning capabilities to embedded devices.

Inference consists of using the trained model to carry out tasks based on what it has learned, such as identifying objects. The new third-generation Myriad X processor aims to make inference more accessible to edge devices like drones, cameras, robots, and VR and AR devices, among others. Combining vision processing with AI capabilities should enable a vastly expanded set of applications.
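To make the split between the two steps concrete, here is a minimal sketch in plain Python. It is a toy perceptron that learns the logical AND function and has nothing to do with Movidius' actual toolchain; the function names `train` and `infer` and the training data are illustrative assumptions. The point is only that training loops over labeled data many times to adjust weights, while inference is a single cheap pass with the weights frozen.

```python
# Toy illustration of training vs. inference (not Movidius code).
# Labeled examples for logical AND: ((input_a, input_b), expected_output)
TRAINING_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def infer(weights, bias, inputs):
    """Inference: one forward pass with fixed, already-trained weights."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(data, epochs=20):
    """Training: repeatedly adjust the weights using labeled examples."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - infer(weights, bias, inputs)
            # Perceptron update rule: nudge weights toward the target.
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# The expensive step, done once (in practice, in the data center).
weights, bias = train(TRAINING_DATA)

# The cheap step, repeated on the edge device for every new input.
for inputs, _ in TRAINING_DATA:
    print(inputs, "->", infer(weights, bias, inputs))
```

Real deep neural networks have millions of weights rather than three, which is why training lands in the data center and why dedicated inference hardware is attractive at the edge.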

The diminutive 16nm VPU SoC includes vision accelerators, a Neural Compute Engine, imaging accelerators, and 16 SHAVE vector processors paired with a CPU in one heterogeneous package. The combination of units provides a total of up to 4 TOPS (Trillion Operations Per Second) of performance within a slim 1.5W power envelope.
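For a rough sense of efficiency, the two headline figures can be divided to get operations per watt. The sketch below uses only the 4 TOPS and 1.5W numbers quoted above; the helper name `tops_per_watt` is our own, and because both inputs are "up to" figures, the result is a best-case estimate rather than a measured value.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return trillions of operations per second delivered per watt."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


PEAK_TOPS = 4.0  # quoted peak for the whole SoC
POWER_W = 1.5    # quoted power envelope

print(f"{tops_per_watt(PEAK_TOPS, POWER_W):.2f} TOPS/W")  # prints "2.67 TOPS/W"
```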

Movidius' Neural Compute Engine is a fixed-function hardware block that accelerates Deep Neural Network (DNN) inference at more than 1 TOPS. Movidius claims the engine provides industry-leading performance per watt, which is critical for the low-power design. Memory throughput can be a gating factor, so Movidius employs 2.5MB of on-chip memory connected to the Intelligent Memory Fabric to provide up to 450GB/s of bandwidth. The previous-generation Myriad 2 featured 2MB of on-chip memory, but we aren't privy to its memory bandwidth specifications. In either case, all data movement between components costs power, so additional local memory capacity helps keep the power envelope low.

The Myriad X is available in two chip packages that provide different memory accommodations. The MA2085 comes without on-package memory but exposes an interface for external memory. The MA2485 supports 4Gbit of in-package LPDDR4 memory, which is a notable step up from the LPDDR3 supported on the previous-generation model.

Compared to its predecessor, Movidius claims the improved memory subsystem powers up to 10X the peak floating-point computational throughput when the processor is running multiple neural networks.

Twenty Enhanced Vision Accelerators offload some tasks, such as optical flow and stereo depth, from the primary compute engine. The stereo depth block can simultaneously process dual 720p camera inputs at up to 180Hz, or six 720p inputs at 60Hz.
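The two stereo depth configurations look different, but a quick calculation suggests they describe the same pixel budget. The sketch below assumes 720p means 1280x720 (the article does not spell out the resolution), and the helper name `pixel_rate` is ours.

```python
WIDTH, HEIGHT = 1280, 720  # assumption: "720p" means 1280x720


def pixel_rate(streams: int, fps: int, width: int = WIDTH, height: int = HEIGHT) -> int:
    """Total pixels per second across all camera streams."""
    return streams * width * height * fps


dual_180hz = pixel_rate(streams=2, fps=180)
six_60hz = pixel_rate(streams=6, fps=60)

print(f"2 x 720p @ 180Hz: {dual_180hz:,} pixels/s")
print(f"6 x 720p @ 60Hz:  {six_60hz:,} pixels/s")
print("Same budget:", dual_180hz == six_60hz)
```

Both cases work out to 331,776,000 pixels per second, so the block appears to trade stream count against frame rate within a fixed throughput.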

The Myriad 2 featured 12 SHAVE (Streaming Hybrid Architecture Vector Engine) cores optimized for computer vision workloads; the Myriad X increases that to 16. The processor supports up to eight HD camera inputs and can process up to 700 million pixels per second. The 16 SHAVE cores, which are programmable 128-bit VLIW vector processors, complement the other units to boost image signal processing performance.
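As a back-of-the-envelope check, the 700 million pixels per second figure can be turned into a per-camera frame rate. This sketch rests on several assumptions that are not in the announcement: that the budget is split evenly across all eight cameras, that "HD" means either 1280x720 or 1920x1080, and that no other bottleneck applies. The helper name `max_fps_per_camera` is ours.

```python
PIXEL_BUDGET = 700_000_000  # quoted peak pixels per second
CAMERAS = 8                 # quoted maximum number of HD inputs

# Assumption: the article does not say which "HD" resolution is meant.
RESOLUTIONS = {"720p": (1280, 720), "1080p": (1920, 1080)}


def max_fps_per_camera(budget: int, cameras: int, width: int, height: int) -> float:
    """Upper bound on frames per second if the budget is shared evenly."""
    return budget / (cameras * width * height)


for name, (width, height) in RESOLUTIONS.items():
    fps = max_fps_per_camera(PIXEL_BUDGET, CAMERAS, width, height)
    print(f"{CAMERAS} x {name}: up to about {fps:.1f} fps each")
```

Under these assumptions the budget allows roughly 95 fps per camera at 720p and roughly 42 fps at 1080p.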

The SoC employs 16 MIPI lanes that support up to eight HD RGB sensors. Hardware encoders support 4K resolutions at 30Hz (H.264/H.265) and 60Hz (M/JPEG). Other connectivity options include PCIe 3.0 and USB 3.1 interfaces. The PCIe interface allows vendors to incorporate several VPUs in a single device.

Movidius provides the Myriad Development Kit (MDK) for programming. It includes the requisite dev tools, frameworks, and APIs.

The Myriad X joins Intel's growing arsenal of AI-centric solutions, such as Altera FPGAs, Nervana ASICs, and Xeon Phi products. Movidius hasn't shared pricing information, but we'll update as more information becomes available.
